Research Unit MDC

Integrative imaging data science: we focus on integrating heterogeneous imaging data across modalities, scales, and time. We develop concepts and algorithms for the generic processing, stitching, fusion, and visualization of large, high-dimensional datasets.

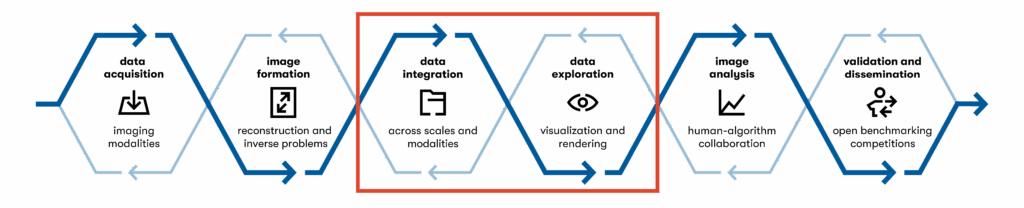

The amount of image data, algorithms, and visualization solutions is growing rapidly. This creates an urgent demand for integration across multiple modalities and across scales in space and time. We develop and provide Helmholtz Imaging (HI) solutions that can handle the highly heterogeneous image data from the research areas of the Helmholtz Association without imposing restrictions on the respective image modalities. To lay the groundwork for implementing HI solutions, our team at MDC will focus on the following research topics:

- Develop concepts and algorithms for handling and generic processing of high-dimensional datasets.

- Develop algorithms for the stitching, fusion, and visualization of large, high-dimensional image data.

Our “Integrative Imaging Data Science” Group specializes in new concepts, mathematical approaches, and representations for large-scale image data. Our work includes the development of algorithms, computational resources, and visualization solutions across scales in space and time and across modalities. We bundle expertise in:

- concepts and algorithms for handling, generic processing and representation of high-dimensional datasets

- image analysis and visualization across scales

- frameworks for large data management, image analysis and data abstraction


Projects

Helmholtz Imaging Projects are awarded through annual calls to cross-disciplinary research teams that identify innovative research topics at the intersection of imaging and information and data science, initiate cross-cutting research collaborations, and thus support the growth of the Helmholtz Imaging network. The calls are based on the general concept for Helmholtz Imaging and are in line with the future topics of the Initiative and Networking Fund (INF).

Research Unit DESY

The Research Unit at DESY focuses on the early stages of the imaging pipeline, developing methods for advanced image reconstruction, including the optimization of measurements and the combination of classical methods with data-driven approaches.

Our goal is to extract the maximum amount of (quantitative) information from given or designed measurements.

Research Unit DKFZ

The Research Unit at DKFZ focuses on the downstream stages of the imaging pipeline, developing robust methods for automated image analysis and emphasizing rigorous validation.

Our goal is to enable trustworthy and generalizable AI across scientific imaging domains.

Publications

Helmholtz Imaging captures the world of science. Discover unique data sets, ready-to-use software tools, and top-level research papers. The platform’s output originates from our research groups as well as from projects funded by us, theses supervised by us and collaborations initiated through us. Altogether, this showcases the whole diversity of Helmholtz Imaging.