UQOB – Uncertainty Quantification in Object-detection Benchmark

What is the project about?

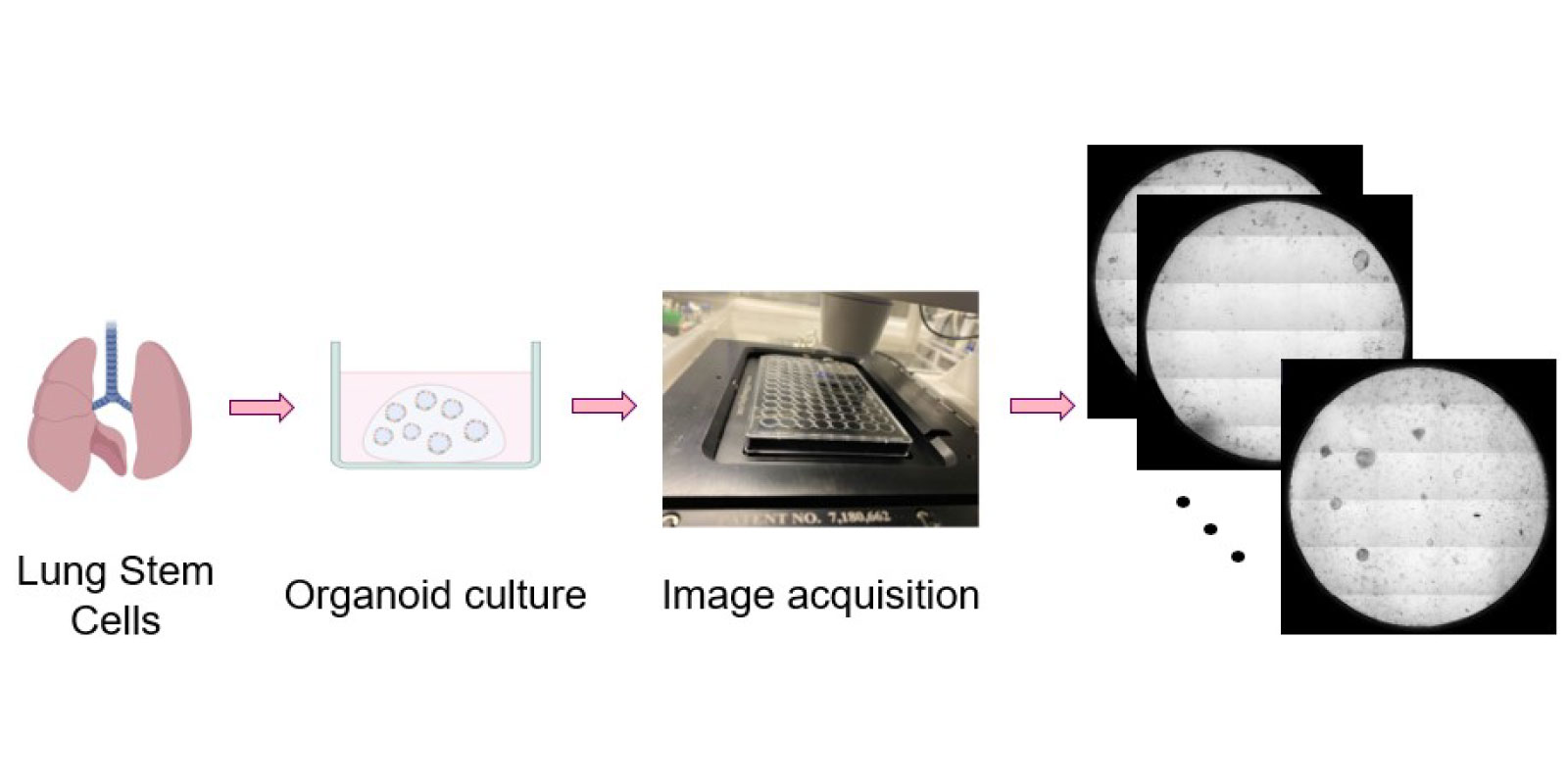

We propose a benchmark dataset for object detection and Uncertainty Quantification (UQ) in 2D microscopy images of organoids. The dataset will include over 800 high-resolution images with more than 120,000 organoids annotated by multiple annotators. This will support robust AI development and evaluation in biomedical imaging.

What motivated you to apply for UNLOCK, and how does the project align with the initiative’s vision?

We want to create a high-quality benchmark dataset that supports robust AI development and evaluation in biomedical imaging, leveraging Helmholtz’s resources and promoting cross-center and cross-disciplinary collaboration.

What gap in the scientific community led to the creation or expansion of this benchmarking dataset?

Existing detection benchmarks lack multi-rater annotations and proper evaluation of UQ; this dataset aims to fill that gap.

How does the benchmark dataset support reproducibility, robustness, and fairness in AI research?

We will provide a publicly available dataset and propose a standardized annotation protocol for the multi-rater setting. We will also adhere to the FAIR principles for metadata and provide open-source baselines.

What is the project’s structure — from data curation to expected outputs such as publications or competitions?

We plan the following steps:

- Data collection: selecting existing microscopy images of lung and colon organoids and generating new ones.

- Annotation and multi-class labeling by multiple raters, following a protocol that includes automatic pseudo-labeling and alignment annotation sprints.

- Baseline model development for detection and UQ.

- Public release as a Kaggle challenge.
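To make the multi-rater annotation step more concrete, here is a minimal sketch of how agreement between two annotators could be measured on bounding boxes. It is an illustration only, not the project's actual protocol: the box format `(x_min, y_min, x_max, y_max)`, the function names `iou` and `rater_agreement`, the greedy one-to-one matching, and the 0.5 IoU threshold are all assumptions.

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def rater_agreement(rater_a: List[Box], rater_b: List[Box],
                    threshold: float = 0.5) -> float:
    """Dice-style agreement: 2 * matches / (boxes from A + boxes from B).

    Boxes are matched greedily one-to-one; a pair counts as a match
    when its IoU is at least `threshold` (hypothetical default).
    """
    unmatched_b = list(range(len(rater_b)))
    matches = 0
    for box_a in rater_a:
        best_j, best_score = None, threshold
        for j in unmatched_b:
            score = iou(box_a, rater_b[j])
            if score >= best_score:
                best_j, best_score = j, score
        if best_j is not None:
            unmatched_b.remove(best_j)
            matches += 1
    total = len(rater_a) + len(rater_b)
    # Two empty annotation sets are treated as full agreement.
    return 2 * matches / total if total else 1.0


# Toy example: the raters agree on one organoid and disagree on another.
rater_a = [(0, 0, 10, 10), (20, 20, 30, 30)]
rater_b = [(1, 1, 10, 10), (50, 50, 60, 60)]
agreement = rater_agreement(rater_a, rater_b)
```

A low agreement score on an image would flag it as ambiguous, which is the kind of signal a UQ method could be evaluated against.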

In what ways does the project foster cross-domain, cross-center, or interdisciplinary collaboration?

It involves Helmholtz Munich and DKFZ, integrating lung and cancer research. Organoid research is also very active in other research domains (see, e.g., Hereon/Helmholtz Imaging). The benchmark will also influence fundamental AI method development, particularly in the UQ field.

What impact does the project aim to achieve — within Helmholtz and across the broader research and industry community?

The benchmark will help develop better AI for biomedical imaging, and for organoid research in particular, with the promise of enabling high-throughput, better-standardized, and reproducible research. It will also provide an interesting new benchmark for developing and testing UQ methods.

Other projects

ForestUNLOCK: A multi-modal Multiscale Benchmark Dataset for AI-Driven Boreal Forest Monitoring and Carbon Accounting

Building the first consistent multi-modal single tree benchmark for forest structure and carbon stock assessments of the northern boreal forest.

Pero – Unlocking ML Potential: Benchmark Datasets on Perovskite Thin Film Processing

Addressing the lack of standardized, FAIR benchmark datasets in perovskite photovoltaics. Pero enables reproducible AI models for efficiency prediction, material classification, and defect detection, which are critical for industrial scaling of sustainable energy technologies.