Published on 05.05.2025

Why Image Data Integrity Matters: Insights from Ella Bahry from the Engineering and Support Unit at MDC

Reproducibility is not just a buzzword; it is the foundation of trustworthy science and the open science movement. Yet, ensuring that research findings can be independently verified is a challenge that requires both cultural and technological shifts. For Deborah Schmidt, who leads the Engineering and Support Unit at the Max Delbrück Center (MDC), and her team, open science is a matter close to their hearts. It plays an important role in their daily work and shapes the way researchers work with image data.

Their passion for open science and data integrity is evident in their contributions to the Helmholtz Imaging community, where the Unit develops computational tools that enhance the quality and transparency of image-based research. Most recently, together with Dr. Ella Bahry and Jan Philipp Albrecht, Research Software Scientists, and Maximilian Otto, Student Worker, Deborah co-organized the Image Prevalidation Workshop as part of the Helmholtz Reproducibility Workshop on March 14, 2025, an event dedicated to exploring the essence of reproducible science and its transformative potential (find the workshop slides here).

The Importance of Image Prevalidation

Science produces many images. Across research fields and disciplines, images and image data are essential for gaining knowledge. However, hidden issues in image data, such as inconsistencies or artifacts, can lead to incorrect conclusions. The Image Prevalidation Workshop aimed to tackle this problem head-on. “The goal of image prevalidation is simple: detect problems before they impact research results,” explains Deborah. “We want to equip researchers with the tools to assess the integrity of their image data before diving into analysis.”

A Commitment to Open Science

The team’s advocacy for open and reproducible science goes far beyond a single event. The Unit has been instrumental, amongst other things, in developing Album, a decentralized platform for sharing digital scientific solutions. “We want to make computational tools and methods openly accessible,” Deborah notes. “Open science isn’t just about publishing results, it’s about making the entire research process transparent and reproducible.”

Interview with Ella Bahry

To dive deeper into why image validation matters and how open science is shaping reproducibility, we spoke with Ella Bahry. Here’s what she had to say about her work, the challenges in imaging research, and the future of reproducible science.

What motivated you to co-organize the Image Prevalidation Workshop?

Working in the Helmholtz Imaging Engineering and Support Unit at MDC, I constantly encounter diverse image datasets. I noticed a recurring pattern: critical insights about the data and its quality, as well as inconsistencies, often surface very late in the process. Researchers invest immense effort in planning, protocols, and imaging, yet that crucial intermediate step – really getting to know the data through prevalidation – is often overlooked.

This missing step leads to inefficiency. Imagine prevalidating just a small test dataset before a large-scale imaging run; you might discover ways to optimize your protocol for much better images. Similarly, prevalidating the full dataset afterwards – checking examples, distributions, metadata, and identifying outliers – prevents pitfalls. Importantly, it empowers researchers to truly understand their data, enabling better decisions about preprocessing, analysis, and visualization.
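One of the checks mentioned here, looking at distributions to identify outliers, can be sketched in a few lines of Python. This is an illustration only and not how PixelPatrol works. The function name `flag_outliers`, the sample values, and the choice of statistic (one mean intensity per image) are assumptions made for the example. It uses the modified z-score based on the median absolute deviation, with the commonly used cutoff of 3.5.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # At least half of the values are identical; the score is undefined.
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical per-image mean intensities; the sixth image is far too dark.
mean_intensities = [101.2, 99.8, 100.5, 98.9, 100.1, 12.3, 100.7]
outliers = flag_outliers(mean_intensities)
print(outliers)
```

A flagged image is not necessarily faulty. It is simply one worth opening and inspecting before the analysis starts.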

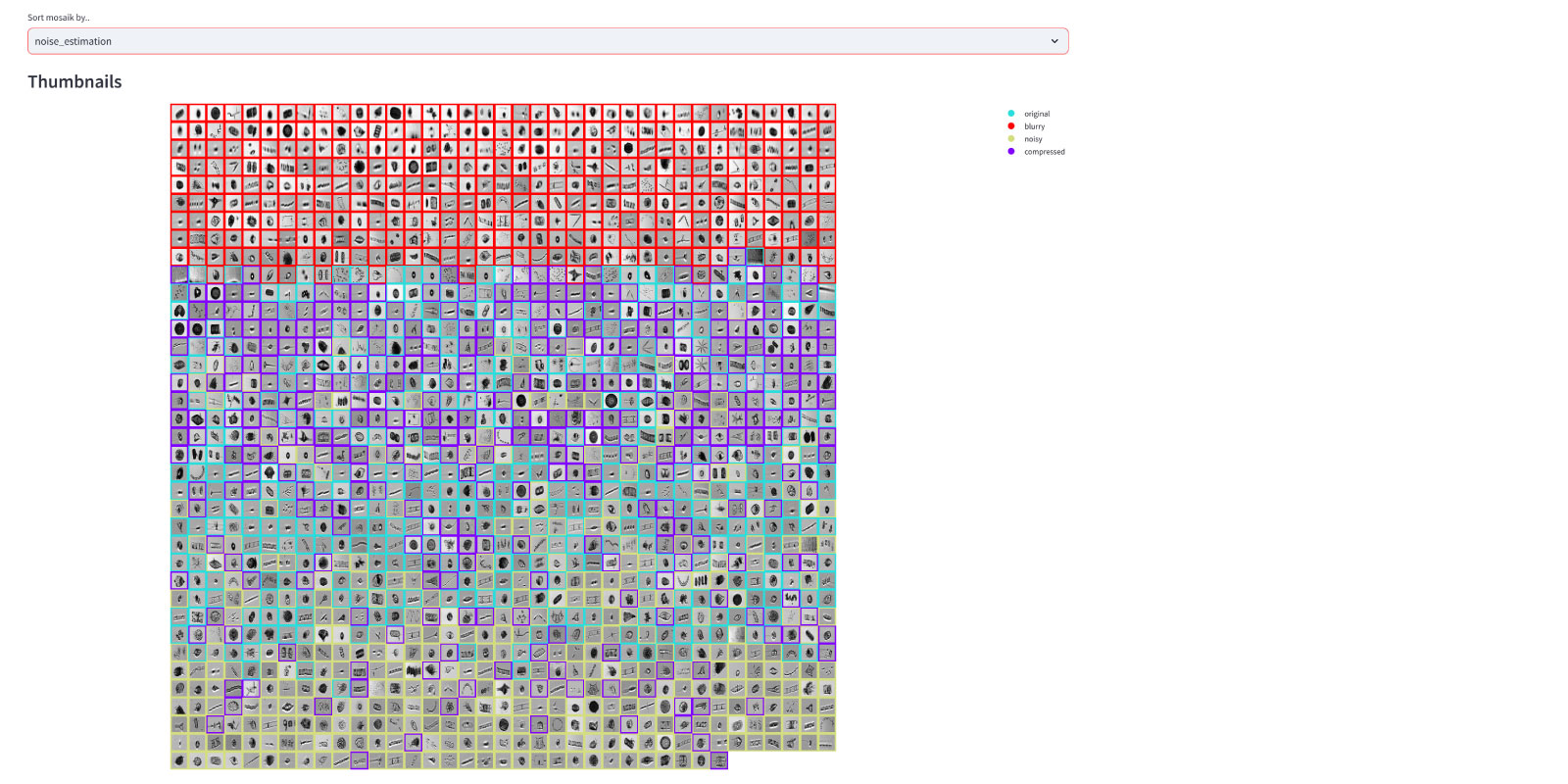

With today’s massive datasets, especially for AI models, efficient prevalidation isn’t just helpful, it’s essential. It saves time and frustration down the line by catching issues like incompatible formats, varying resolutions, artifacts, or mislabeled files early. Because I’m passionate about this, I wanted to both educate researchers through workshops and provide practical help via tools like PixelPatrol, ultimately building a community more adept at handling data effectively from the start.

Why is image prevalidation important for reproducible science?

Prevalidation helps transform your dataset from a potential ‘black box’ into a transparent, well-understood resource that you and others can trust and reuse. If your data harbors hidden inconsistencies – perhaps subtle artifacts, varying acquisition settings between batches, or inaccurate metadata – reproducing the analysis becomes incredibly difficult, if not impossible. Any conclusions drawn might unknowingly depend on these flaws.

Prevalidation is the systematic process of checking this integrity, and documenting the data, before deep analysis. Are file formats and metadata consistent? Are there outliers or quality issues that could bias results? By identifying, documenting, and potentially addressing these points early, you build your research on a solid, reliable foundation. This ensures conclusions are genuinely supported by the data, which is the very essence of reproducibility. It’s about achieving “Replicability through Data Knowledge”.
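The question of whether file formats and metadata are consistent lends itself to a small sketch. The example below is hypothetical: the metadata fields (`format`, `width`, `height`, `pixel_size_um`), the file names, and the function `inconsistent_fields` are assumptions for illustration, and in practice the records would be read from the image files themselves. It reports every field that takes more than one value across the dataset.

```python
from collections import Counter


def inconsistent_fields(records, ignore=("file",)):
    """Map each metadata field with more than one distinct value to its value counts."""
    fields = sorted({key for record in records for key in record} - set(ignore))
    report = {}
    for field in fields:
        # A field absent from a record is counted as its own "<missing>" value.
        counts = Counter(record.get(field, "<missing>") for record in records)
        if len(counts) > 1:
            report[field] = dict(counts)
    return report


# Hypothetical metadata records, one per image.
records = [
    {"file": "a.tif", "format": "tiff", "width": 1024, "height": 1024, "pixel_size_um": 0.65},
    {"file": "b.tif", "format": "tiff", "width": 1024, "height": 1024, "pixel_size_um": 0.65},
    {"file": "c.png", "format": "png", "width": 512, "height": 512},
]

for field, counts in inconsistent_fields(records).items():
    print(field, counts)
```

An empty report means the checked fields agree across all images. Anything else is a prompt to document the difference or to fix it before analysis.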

Can you share some real-world examples where improper image validation led to misleading scientific conclusions?

While researchers are diligent, subtle data flaws can easily be missed during routine work. Systematic prevalidation is specifically designed to catch these early. I’ve seen projects, including my own, where months of analysis were ultimately wasted because a fundamental data issue – something prevalidation could have flagged at the start – wasn’t discovered until very late.

For example, after months of analysis, I found that the expected relationship between experimental conditions didn’t hold, likely due to image quality, an issue prevalidation could have revealed immediately. I have also witnessed a case where faulty microscope parameters invalidated a batch of images, and others where outliers or duplicate images skewed the training of machine learning models. These situations cause immense frustration and waste valuable resources. While maybe not always leading to published misleading conclusions, they certainly compromise the quality and efficiency of the research process. Early validation could have prevented these detours, saved time, and led to higher quality results much sooner.
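Duplicate images of the kind mentioned here are among the easier problems to detect automatically. As a minimal sketch, assuming the duplicates are byte-identical copies, files can be grouped by a SHA-256 hash of their contents. The function name `find_duplicate_files` is hypothetical, and this is not a description of any existing tool.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def find_duplicate_files(folder):
    """Return groups of file names whose contents are byte-for-byte identical."""
    groups = defaultdict(list)
    # Sorting makes the order of the reported names deterministic.
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            groups[digest].append(path.name)
    return [names for names in groups.values() if len(names) > 1]
```

Calling `find_duplicate_files("my_dataset")` on a dataset folder returns a list of such groups. Images that were re-saved, re-encoded, or cropped will not match with this approach and would need a comparison of pixel content instead.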

How does your work at the Engineering and Support Unit at MDC contribute to improving reproducibility in science?

Our unit fosters reproducibility in several key ways. Through direct support and consultation, we guide researchers beyond just analysis techniques, promoting best practices in data acquisition, management, quality control, and building reproducible analysis pipelines.

We also develop and share software solutions adhering to FAIR principles (Findable, Accessible, Interoperable, Reusable). We champion open-source code to make methods transparent and repeatable. Tools like PixelPatrol, our prevalidation prototype, directly address a community need for easier, standardized data checks. Our tool Album facilitates sharing and reusing digital solutions, preventing duplicated effort.

I especially enjoy educating scientists on these practices through workshops and direct interaction. It’s a mix of providing direct help, building better infrastructure, and fostering a culture of open, reproducible science.

Open science is a core value in your work. Why is transparency in data analysis and image processing so important?

Transparency transforms science from isolated efforts into a collaborative, evolving process. When you share not just results, but the underlying data, analysis pipelines, and quality checks, you invite scrutiny, validation, and extension by the community. This builds trust among scientists and the general population.

Processing steps or even minor parameter tweaks during acquisition or analysis can significantly impact outcomes. Thus, full visibility is crucial for confidence and full understanding of how results were derived. Sharing methods and code also accelerates discovery by allowing researchers to learn from and build upon each other’s work, rather than constantly reinventing the wheel, saving time and resources across the board.

What challenges do researchers face when trying to implement best practices in image validation, and how does your team support them?

Researchers often face a complex landscape. Firstly, there’s the knowledge gap – best practices aren’t always universally agreed upon (even experts debate formats and methods), and knowing what’s ‘good enough’ quality for a specific analysis requires both domain and technical expertise.

Secondly, time and resource constraints are very real. Under pressure to publish, dedicating time to thorough validation and data organization can feel like an extra burden, even though it pays off later.

Thirdly, the lack of standardized, user-friendly tools means scientists might resort to hand-crafting scripts or using tools they don’t fully understand, risking errors.

Our team helps researchers adopt open science and best practices through several key activities: raising awareness via workshops, talks, and documentation; providing direct support with consultations tailored to specific datasets and challenges; contributing through tool development, creating resources like PixelPatrol and Album, and guiding on using existing software effectively; and facilitating connections with imaging specialists or other experts when needed. Ultimately, we aim to make adopting these crucial practices less daunting and more integrated into the scientific workflow.

If there was one key message you’d want researchers to take away from your workshop, what would it be?

Science is often complex and messy; creating a ‘perfect’ dataset might be unrealistic. The key message isn’t about achieving perfection, but this: become the expert of your own dataset. Invest the time to understand its nuances, its strengths, and its limitations through prevalidation. This knowledge empowers you to choose the right analysis steps, maximize the value you extract, and produce more reliable results. Embrace transparency in this process – it strengthens your work and contributes to our collective scientific endeavor.

Thank you, Ella, for your insights!

Looking Ahead: The Future of Reproducible Science

As the scientific community continues to embrace open science, the Engineering and Support Unit at MDC remains committed to advocating for reproducible research. From hosting workshops to developing next-generation computational tools, their work ensures that researchers can trust their data – and the conclusions drawn from it.

“Reproducibility isn’t just an obligation, it’s an opportunity,” Deborah emphasizes. “When we make our science more transparent, we don’t just improve our own research; we build trust in the entire scientific process.”

The Helmholtz Reproducibility Workshop provided a platform to discuss these important topics. The effort to improve scientific quality, however, is an ongoing process that benefits researchers, institutions, and, ultimately, society as a whole.

Links

Album, a decentralized platform for sharing digital scientific solutions