Research Unit DKFZ

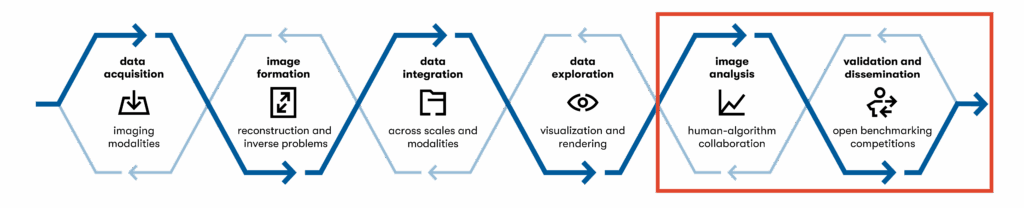

Image Analysis & Validation (DKFZ): We focus on the downstream stages of the imaging pipeline – specifically, the development, validation, and deployment of advanced AI-based methods for automated image analysis. These stages are critical for extracting high-level, domain-relevant information from complex imaging data. Our work addresses algorithmic challenges in interpreting, quantifying, and validating image-derived information, with the overarching goal of enabling trustworthy, robust, and generalizable AI-driven analysis.

We conduct interdisciplinary research in three core areas:

(1) automated image analysis, where we work to enhance the robustness and generalizability of AI methods across diverse and imperfect real-world datasets;

(2) human-machine interaction, which aims to integrate humans as active participants in AI development and deployment to ensure transparency, trust, and safety; and

(3) validation and benchmarking, where we lead efforts to develop and standardize validation practices and build tools that enable reproducible and transparent assessment of algorithm performance.

By addressing these pivotal stages in the imaging pipeline, our mission is to advance the reliability and impact of AI-based image analysis across scientific and societal applications.

Other Research

Projects

Helmholtz Imaging Projects are granted to cross-disciplinary research teams that identify innovative research topics at the intersection of imaging and information & data science, initiate cross-cutting research collaborations, and thus underpin the growth of the Helmholtz Imaging network. The annual calls for these projects are based on the general concept for Helmholtz Imaging and align with the future topics of the Initiative and Networking Fund (INF).

Research Unit DESY

The Research Unit at DESY focuses on the early stages of the imaging pipeline, developing methods for advanced image reconstruction, including the optimization of measurements and the combination of classical methods with data-driven approaches.

Our goal is to extract the maximum amount of (quantitative) information from given or designed measurements.

Research Unit MDC

The Research Unit at MDC focuses on integrating heterogeneous imaging data across modalities, scales, and time. We develop concepts and algorithms for generic processing, stitching, fusion, and visualization of large, high-dimensional datasets.

Our aim is to enable seamless analysis of complex imaging data without restrictions on the underlying modalities.

Publications

Helmholtz Imaging captures the world of science. Discover unique data sets, ready-to-use software tools, and top-level research papers. The platform’s output originates from our research groups as well as from projects we fund, theses we supervise, and collaborations we initiate. Together, these showcase the full diversity of Helmholtz Imaging.